Procedural Sound

The interactive nature of video games necessitates variety in sound. Taking a simple implementation approach of having an audio asset played back in response to each unique in-game event would require a massive amount of assets to account for every possibility (Stevens, 2016).

The modification and generation of assets to solve this problem has been coined 'Procedural Sound', though its precise definition has been the subject of much debate.

Below, we identify 3 examples of games that use procedural sound, and explore why and how they have implemented this technique into their audio.

~~~~~~~~~~~~~~~~~~~~~~~

An obvious use-case for procedural sound can be found in games that employ procedural generation; in the case of Hello Games’ 2016 release No Man’s Sky (No Man’s Sky, 2016), planet biomes, creatures, starships, and more are procedurally generated (McKendrick, 2017).

For the audio team, this meant they were unable to implement and design sounds in a conventional manner, as the developers “[didn’t] know what a planet is before it’s created” (Weir, 2017), much less what it should sound like. With a total of 18 quintillion possible planets (Kharpal, 2016), leveraging parameterisation through Audiokinetic’s Wwise (Audiokinetic, n.d.) middleware to proceduralise sound was quickly identified as a solution to the challenge of creating variety. Merely 9 key parameters were used to drive a single Wwise event that handled all local and global ambiences, including weather effects (Weir, 2017):

Global biome (State)

Localised ambience switched (Switch)

Building interiors (State & RTPC)

Rain (State & RTPC)

Storm (RTPC)

Planet time (RTPC)

Creature existence (RTPC)
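To illustrate how a handful of game-side values can drive a single ambience event, the following is a minimal Python sketch. It is not Wwise's API and not Hello Games' implementation: the field names mirror the list above, while the layer names, value ranges, and mixing rules are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class AmbienceParams:
    """Hypothetical snapshot of the game-side values listed above."""
    biome: str               # global biome (State)
    local_ambience: str      # localised ambience (Switch)
    indoors: bool            # building interior (State)
    interior_amount: float   # building interior (RTPC), assumed 0..1
    raining: bool            # rain (State)
    rain_intensity: float    # rain (RTPC), assumed 0..1
    storm: float             # storm (RTPC), assumed 0..1
    planet_time: float       # planet time (RTPC), assumed hours 0..24
    creature_density: float  # creature existence (RTPC), assumed 0..1


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def ambience_layers(p: AmbienceParams) -> dict:
    """Return a gain between 0 and 1 for each (hypothetical) ambience layer."""
    # Outdoor layers are ducked by however far "inside" the player is.
    outdoor = 1.0 - clamp01(p.interior_amount) if p.indoors else 1.0
    rain = clamp01(p.rain_intensity) if p.raining else 0.0
    # Assumed night weighting: 1 at midnight, 0 at midday.
    night = abs((p.planet_time % 24.0) - 12.0) / 12.0
    creatures = clamp01(p.creature_density)
    return {
        f"biome/{p.biome}": outdoor,
        f"local/{p.local_ambience}": outdoor,
        "interior_roomtone": 1.0 - outdoor,
        "rain": rain * outdoor,
        "storm": clamp01(p.storm) * outdoor,
        "creatures_day": creatures * (1.0 - night) * outdoor,
        "creatures_night": creatures * night * outdoor,
    }
```

The point of the sketch is that the sound designer never needs to know which planet is being played: every layer gain is derived from exposed parameters at runtime.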

On procedural audio, audio director Paul Weir points out that the term is too broad, and that any form of synthesis could be seen as “procedural”. He has come up with his own definition, which he presents as “the creation of sound in real-time, using synthesis techniques such as physical modelling, with deep links into game systems” (Weir, 2017).

A prime example conforming to this definition would be the audio team's approach to crafting creature sounds; due to the variety of creatures that could be created and their pivotal contribution to the game’s fantasy of exploring alien planets, they invested time into creating a bespoke Wwise plugin they named ‘VocAlien’, which they use to generate all creature vocalisations in the game. The plugin functions similarly to a software instrument, simulating a real-time physically modelled vocal tract. Exposed design parameters such as ‘harshness’ and ‘screech’ facilitate creation and curation of unique creature archetypes, and formant filtering simulating phonemes both realistic and alien were implemented as a final step. Sound designers also crafted morph targets through MIDI performance files, which were used to shift between 27 preset states for each creature, ranging from idle to angry (Weir, 2017).

Figure 1: VocAlien MIDI control surface user interface (Weir, 2017).
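The idea of shifting between preset states can be sketched as a simple interpolation between parameter sets. This is a hedged illustration only: ‘harshness’ and ‘screech’ are named in the talk, but the `pitch_hz` parameter, the numeric values, and the linear blend are our assumptions, not a description of how VocAlien works internally.

```python
def morph(state_a: dict, state_b: dict, t: float) -> dict:
    """Linearly blend two parameter presets; t=0 gives state_a, t=1 gives state_b."""
    t = max(0.0, min(1.0, t))
    return {
        name: (1.0 - t) * state_a[name] + t * state_b[name]
        for name in state_a
    }


# Hypothetical presets for one creature archetype.
IDLE = {"harshness": 0.1, "screech": 0.0, "pitch_hz": 180.0}
ANGRY = {"harshness": 0.9, "screech": 0.7, "pitch_hz": 320.0}

halfway = morph(IDLE, ANGRY, 0.5)
```

In a sketch like this, a performance file would supply the value of `t` over time, so a single recorded gesture could move a creature smoothly from one state to another.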

Approximately a dozen creature archetypes were created to help with generalising creature vocalisations into recognisable categories like amphibians, mammals, and birds (Weir, 2017). An overabundance of variation creates a lack of excitement in the listener; if every sound heard is completely new, then “it will be impossible to surprise [them]” (Weir, 2017). In a similar vein, the creation of completely random sounds often leads to sonically unappealing results, so dialling in characteristics of sounds such as average frequency is a key consideration (Fournel, 2010).

Challenges were not limited to the creation of sounds, but also their playback; the aforementioned lack of knowledge of the game’s geometry meant it was impossible to take a conventional pre-computational approach to calculating essential acoustic phenomena such as reflection, obstruction, and occlusion (Raghuvanshi and Snyder, 2014), and interactive sound rendering was outside of the game’s hardware budget (Micah T. et al., 2009). The solution was to constantly fire raycasts between sound sources and the player’s location and dynamically apply appropriate filtering through Wwise (Weir, 2017), as demonstrated in Figures 2 and 3.

Figure 2: Example of raycasts fired from a player's location informing the sound engine how sounds should be played back. The figure illustrates how vertical raycasts can be used to determine if a player is inside a cave (Weir, 2017).

Figure 3: Video clip of the game's audio director demonstrating dynamic ambience and a practical use of raycast information (Weir, 2017).
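The raycast approach described above can be sketched in a few lines. The example below is an assumption-laden toy: it uses a 2D grid of solid cells in place of real game geometry, a simple ray march in place of an engine raycast, and made-up cutoff frequencies. It only aims to show the principle of turning ray hits into a filter setting and a "the player is under cover" flag.

```python
import math


def ray_blocked(solid: set, start, end, step: float = 0.25) -> bool:
    """March from start towards end; True if any sample lands in a solid cell."""
    distance = math.dist(start, end)
    samples = max(1, int(distance / step))
    for i in range(1, samples):
        t = i / samples
        point = tuple(s + (e - s) * t for s, e in zip(start, end))
        if tuple(math.floor(c) for c in point) in solid:
            return True
    return False


def lowpass_cutoff_hz(solid, listener, source,
                      open_hz: float = 20000.0, blocked_hz: float = 800.0) -> float:
    """Pick a (hypothetical) low-pass cutoff depending on line of sight."""
    return blocked_hz if ray_blocked(solid, listener, source) else open_hz


def is_under_cover(solid, listener, height: float = 50.0) -> bool:
    """Fire one vertical ray upwards, as in the cave example of Figure 2."""
    above = (listener[0], listener[1] + height)
    return ray_blocked(solid, listener, above)


# Coordinates are (x, y) with y pointing up; each solid cell is one unit square.
wall = {(5, 0)}
cave_roof = {(0, 3)}
```

With the player at `(0.5, 0.5)`, a source at `(9.5, 0.5)` sits behind the wall and would be filtered, while a source at `(3.5, 0.5)` would not.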

Despite the key problem of designing and implementing sound without having any references beyond exposed game parameters, the team’s utilisation of procedural sound generation both addressed their challenges and opened new avenues for creativity, such as in the form of a custom vocal synthesiser plugin.

References:

Audiokinetic (n.d.) Audiokinetic Wwise [Online]. www.audiokinetic.com. Available from: <https://www.audiokinetic.com/en/wwise/overview/> [Accessed 8 October 2024].

Fournel, N. F. (2010) Procedural Audio for Video Games: Are We There Yet? [Online]. www.gdcvault.com. Available from: <https://www.gdcvault.com/play/1012645/Procedural-Audio-for-Video-Games> [Accessed 8 October 2024].

Kharpal, A. (2016) ‘No Man’s Sky’: Would You Play a Game That Takes 584 Billion Years to Explore? [Online]. CNBC. Available from: <https://www.cnbc.com/2016/08/10/no-mans-sky-release-would-you-play-a-game-that-takes-584-billion-years-to-explore.html> [Accessed 8 October 2024].

McKendrick, I. (2017) Continuous World Generation in No Man’s Sky [Online]. YouTube. Available from: <https://youtu.be/sCRzxEEcO2Y?si=VqzLZSbIdDF1zBP9> [Accessed 8 October 2024].

Micah T., T., Anish, C., Lakulish, A. & Dinesh, M. (2009) RESound: Interactive Sound Rendering for Dynamic Virtual Environments. In: Proceedings of the 17th ACM International Conference on Multimedia, October 19, 2009. pp. 271–280.

No Man’s Sky (2016) London: Hello Games [Game] [PlayStation 4].

Raghuvanshi, N. & Snyder, J. (2014) Parametric Wave Field Coding for Precomputed Sound Propagation. ACM Transactions on Graphics, 33 (4) July, pp. 1–11.

Stevens, R. (2016) Why Procedural Game Sound Design Is so Useful – Demonstrated in the Unreal Engine. A Sound Effect, 18 January [Online blog]. Available from: <https://www.asoundeffect.com/procedural-game-sound-design/> [Accessed 8 October 2024].

Weir, P. (2017) The Sound of ‘No Man’s Sky’ [Online]. gdcvault.com. Available from: <https://gdcvault.com/play/1024067/The-Sound-of-No-Man> [Accessed 8 October 2024].

Grand Theft Auto V

How do you create a soundscape that keeps up with an open-world gameplay experience as broad and chaotic as GTAV without repeating itself? The answer is an in-house game engine: the Rockstar Advanced Game Engine, or RAGE for short. Developed alongside Rockstar's open-world games since 2004, it allows designers to work on each game with the same set of tools, tailored to the type of games the company specialises in (Grand Theft Auto V, 2013; Lanley, 2022; GDCVault, 2014; Rockstarnexus, 2015).

The RAGE engine prioritises modular asset design, giving players a dynamic, varied sound experience while reducing memory usage. Instead of using one large sound file for each individual car crash or explosion, a series of smaller assets is combined into one complete sound (for an explosion, say, the crack, boom, tail and thump). Each component can be picked at random from an array of variants, so the same explosion can be rebuilt in many different combinations (Andersen, 2016).
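A short Python sketch shows why this modular approach is economical. The asset names and the number of variants per layer are invented for illustration, and this is not RAGE's actual data format; only the four layer names come from the example above.

```python
import random

# Hypothetical asset lists: twelve small files in total.
EXPLOSION_LAYERS = {
    "crack": ["crack_01", "crack_02", "crack_03"],
    "boom": ["boom_01", "boom_02", "boom_03", "boom_04"],
    "tail": ["tail_01", "tail_02"],
    "thump": ["thump_01", "thump_02", "thump_03"],
}


def build_explosion(layers: dict, rng: random.Random) -> dict:
    """Pick one variant per layer; the picks would be played back together."""
    return {layer: rng.choice(variants) for layer, variants in layers.items()}


def combination_count(layers: dict) -> int:
    """Number of distinct explosions the layers can produce."""
    total = 1
    for variants in layers.values():
        total *= len(variants)
    return total


explosion = build_explosion(EXPLOSION_LAYERS, random.Random())
```

Here twelve small assets yield 3 × 4 × 2 × 3 = 72 possible explosions, whereas the one-file-per-event approach would need 72 full-length recordings for the same variety.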

This economical approach helped cut the budget for vehicle audio from 20% in GTAIV to 15%. Modular approaches to sound design tend to reduce budgets by reducing the amount of time and resources spent on acquiring physical assets (Nair, 2014).

The engine uses modular sound hierarchies to select an appropriate sound for objects' actions in-game across 31 different sound types (random sounds, multi-channel sounds, envelope sounds, etc.). This allows designers to take an asset such as a bird noise and easily implement it across the world's different attenuation settings, sound positions, times of day and even the variables of different vehicles, promoting a streamlined, creative working environment. It helps create a consistent, realistic soundscape which “supports the verisimilitude of the experience” of gameplay (Raybould and Stevens, 2016, p. 227).

Vehicles in GTAV are structured in layers of different sounds and variations: the type of engine, the gear, the engine revving up or ticking as it cools down, a bassier sound beneath a freeway, and so on. The vehicle engine sound uses a granular engine as opposed to GTAIV’s looping system. Granular systems construct long sounds from much smaller sounds, from 1 ms to 100 ms (or longer), which allows more subtle, in-depth, and nuanced modulation of audio in real time (Creasey, 2017, p. 616). This allows the game to match the on-screen action more convincingly and to apply filters and variations affected by vehicle movement: aero and traction sounds, cars bouncing over surfaces, speeding up, slowing down and so on.
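The principle of granular playback can be sketched with overlap-add of short windowed grains. This is a generic textbook-style illustration in plain Python, not Rockstar's engine: the function names, the jitter parameter, and the idea of driving `position` from something like engine RPM are our assumptions.

```python
import math
import random


def hann(n: int) -> list:
    """Periodic Hann window; copies overlapped by n/2 samples sum to 1."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def granulate(source, out_len, grain_len, hop, position, jitter, rng):
    """Build a long sound by overlap-adding short grains read from `source`.

    position: where to read in the source (0..1); a game might map this
              to engine RPM (an assumption made for this sketch).
    jitter:   random offset in samples, so consecutive grains differ.
    """
    window = hann(grain_len)
    out = [0.0] * out_len
    max_start = len(source) - grain_len
    centre = int(position * max_start)
    for out_start in range(0, out_len - grain_len + 1, hop):
        start = centre + rng.randint(-jitter, jitter)
        start = max(0, min(max_start, start))
        for i in range(grain_len):
            out[out_start + i] += source[start + i] * window[i]
    return out


# At 44.1 kHz a 20 ms grain would be 882 samples; small numbers are used here.
steady = granulate([1.0] * 1000, out_len=400, grain_len=100, hop=50,
                   position=0.5, jitter=10, rng=random.Random(0))
```

Because each grain is chosen independently, `position` can change from one grain to the next, which is what makes this kind of system more responsive than crossfading between a few fixed loops.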

There is an enormous range of collision wave data; the team prioritised making each sound fit its context while staying open to variation. On highways, the effort was made not just to create a generic ambient background noise but to include the in-game sound of any vehicle passing by. This nuanced, detailed audio design is what draws a player into the world, beyond engagement and engrossment and into immersion: the feeling that you are genuinely part of the world (Brown and Cairns, 2004).

Citations:

Brown, E. & Cairns, P. (2004) 'A grounded investigation of game immersion', pp. 1297–1300.

Creasey (2017) Audio Processes: Musical Analysis, Modification, Synthesis and Control. New York; Oxon, Routledge.

DestructionNation (2018) No Seatbelt Car Crashes – GTA 5 Ragdolls Compilation (Euphoria Physics). Uploaded 29 June. Available at: https://youtu.be/KFv-mbPgaG4 (Accessed: 8 October 2024)

GDCVault (2014) GDC Vault – The Sound of Grand Theft Auto V. Available at: https://www.gdcvault.com/play/1020247/The-Sound-of-Grand-Theft (Accessed 8 October 2024)

Grand Theft Auto V. (2013) Playstation 3; Xbox 360 [Game] New York, NY: Rockstar Games.

Lanley, H. (2022) The tech that built an empire: how Rockstar created the world of GTA 5 | TechRadar. Available at: https://www.techradar.com/news/gaming/the-tech-that-built-an-empire-how-rockstar-created-the-world-of-gta-5-1181281 (Accessed 8 October 2024)

Raybould, D. & Stevens, R. (2016) Game Audio Implementation: A Practical Guide Using the Unreal Engine. Burlington, Oxon: Focal Press.

Rockstarnexus (2015) The Sound of Grand Theft Auto V. Uploaded 18 April. Available at: https://youtu.be/PqPaP_09Pwg (Accessed: 8 October 2024)

Nair, N. (2014) What’s the Deal With Procedural Game Audio? Available at: https://designingsound.org/2014/10/31/whats-the-deal-with-procedural-game-audio/ (Accessed 8 October 2024)

3 big concepts

-Physics based sound (Randomisation through design layers)

-Randomisation through component layers

-Dynamic loading per area-“contextual impression” audio

Resident Evil 7

Developing systems over the franchise

Physics Based Sound

Because sounds are no longer called directly from the character's animation but from the movement of each limb separately, animations could be changed or added without needing to re-hook the connections. Movements are read from the animation's components rather than from the composition as a whole (Kojima et al., 2017).
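One way to picture sound being read from limb movement rather than from hand-placed animation events is a contact detector that watches a single limb's height over time. This is a hypothetical sketch: the threshold, the per-frame height data, and the function name are all assumptions, and the talk does not describe Capcom's system at this level of detail.

```python
def contact_events(heights, threshold: float = 0.02) -> list:
    """Return frame indices where a limb drops from above the threshold to at or below it."""
    events = []
    for frame in range(1, len(heights)):
        if heights[frame - 1] > threshold >= heights[frame]:
            events.append(frame)
    return events


# Hypothetical height of one foot above the floor, in metres, per frame.
foot_height = [0.10, 0.05, 0.01, 0.00, 0.06, 0.12, 0.03, 0.00]
footsteps = contact_events(foot_height)
```

Because the detector only looks at the limb's motion, replacing the walk cycle with a new animation would still produce footsteps at the right moments without any re-tagging.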

Planet Coaster

To be more like the variation in real life

Most games, like sports, are about skill and mastery, so the player will be repeating similar actions again and again, and consequently they will be hearing the same sounds again and again. The trouble is that we’re very sensitive to this kind of repetition, since it just doesn’t happen in real life. (Stevens, 2016)

To lower the work involved in simulating real life

'The obvious answer is just to have loads and loads of sounds but it would be far too much work to create 30-40 versions of every sound in the game (plus there are obviously limitations as to how much we can fit on a disk!).' (Stevens 2016)

A new starting point

“What is the player?”

The idea of the player, and therefore of the main protagonist, is rarely up for debate in an RPG. There is usually a first-person player who vicariously experiences, through the game character, whatever is in that worldscape. Within management games such as Planet Coaster, however, that notion is more abstract, and it becomes an important factor in determining the systems that place the “player” at the centre of the mix:

'is it the camera,

is it the crowd,

is it the progression of building your park'

(Florianz, 2017)

Allowing the player to determine the core sounds needed

Since many sounds in this example are based on dynamic loops, there can be many game elements whose sound stays on, on top of all of the one-shots: rollercoaster loops, character loops with dynamic emotional responses, crowd summarising layers, ambiences and perhaps ride music. This makes it important to be able to limit what doesn’t need to be present, and it also gives more autonomy to the player (Florianz, 2017).

Loop layers increasing

- Rollercoasters

- Crowd emotions

- Ambiences

- Music

'the park that people create has to inform the audio, you can’t just play a background loop with a crowd in it, because someone may have decided to not have any crowds in it....

it’s set in an open world so it means it starts with nothing and ends up with possibly everything “you can never predict what’s going to happen”' (Florianz, 2017)

Designing in smaller components per item

Designing in scene components for the mix

Much of the talk is about how everything is broken down into its smallest parts and then rebuilt into a different whole as the base of the soundscape. This happens both at the level of a single item broken into components and at the scale of the game mix, where designed zones are broken down into scene sound contributors that give an overall mix impression. This is how the number of variations rises exponentially, creating challenges for the quantity of data loaded (Stevens, 2016; Florianz, 2017).

Does Procedural really translate to having the whole sound object only established at runtime?

DATA LOAD CONSIDERATIONS

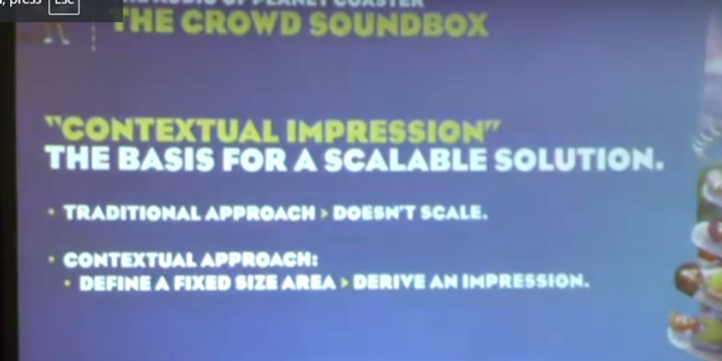

Loading data locationally in contrast to per asset

With the density and quantity of sounds being fully generated per user ‘sound box’, the gameplay is similar to a ‘fluid simulation’. Treating the world as individual objects, for example by having sounds driven from animation loading, would put too much strain on the system because it would be called for every object. To counteract this load, audio is loaded per area range dictated by the player rather than by asset type, so sound assets are not considered for loading unless specifically called upon (Florianz, 2017).

'we had to think of something different that one-to-one relationship an object with an emitter on it and then basically looking at animations to see what sounds to play that wouldn't work and the other problem with that is well if you're looking for stuff in in a certain range you can't look for stuff outside that range so that system couldn't do kind of distance either and well with the camera and with all those objects we'd have to fix kind of mix problems transition problems so we we turned that around a little bit' (Florianz, 2017)
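The shift from "one emitter per object" to "what is within range of the player" can be sketched with a simple spatial index. Everything here is illustrative: the cell size, the object names, and the query radius are assumptions, and Frontier's actual system is only described in outline in the talk.

```python
from collections import defaultdict

CELL_SIZE = 10.0  # hypothetical width of one grid cell in world units


def cell_of(pos) -> tuple:
    """Map a world position (x, y) to its grid cell."""
    return (int(pos[0] // CELL_SIZE), int(pos[1] // CELL_SIZE))


def build_index(objects: dict) -> dict:
    """Bucket object names by the grid cell they sit in."""
    index = defaultdict(list)
    for name, pos in objects.items():
        index[cell_of(pos)].append(name)
    return index


def audible_objects(index: dict, listener, radius_cells: int = 1) -> list:
    """Collect only the objects in cells near the listener."""
    cx, cy = cell_of(listener)
    found = []
    for dx in range(-radius_cells, radius_cells + 1):
        for dy in range(-radius_cells, radius_cells + 1):
            found.extend(index.get((cx + dx, cy + dy), []))
    return sorted(found)


park = {"coaster": (5.0, 5.0), "carousel": (15.0, 5.0), "far_shop": (95.0, 95.0)}
nearby = audible_objects(build_index(park), listener=(6.0, 6.0))
```

The cost of the query depends on the number of cells around the camera, not on the number of objects in the park, which is the property the area-based approach relies on.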

SOUNDBOXING

GRID METHOD

'what we do is we count the grid cell and we say oh there's two people in this grid cell and we count another one oh there's four people in total now and then over the course of an entire frame we spread out this work and we count all the people that are in the entire grid and that system is called the crowd sound' (Florianz, 2017)
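The counting described in the quote can be sketched as a generator that visits a few grid cells at a time and keeps a running total, so the work is spread out rather than done in one burst. The grid contents and the `cells_per_step` parameter are assumptions for illustration, not details from the talk.

```python
def count_in_slices(grid: dict, cells_per_step: int):
    """Visit a few cells per step and yield the running crowd total after each step."""
    cells = sorted(grid)
    total = 0
    for i in range(0, len(cells), cells_per_step):
        for cell in cells[i:i + cells_per_step]:
            total += grid[cell]
        yield total


# Hypothetical number of guests standing in each grid cell.
guests = {(0, 0): 2, (0, 1): 2, (1, 0): 5}
running_totals = list(count_in_slices(guests, cells_per_step=1))
```

With one cell per step the running totals are 2, 4 and then 9, matching the "two people in this grid cell ... four people in total now" pattern in the quote. The final total could then drive a crowd layer, rather than every guest carrying their own emitter.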

- Cameras

- Distances

- Occlusions

- Assets

- Impression

RESEARCHING "CONTEXTUAL IMPRESSIONS" REAL LIFE RECORDINGS

Summary

Goals

- To be more responsive to the player's actual actions

- To be able to adapt to player-centric choices

- To be able to handle the system load of runtime generation of complex audio with many components

3 big concepts

-Physics based sound

-Randomisation through design layers

-Randomisation through component layers

Strategies

- Dynamic loading per area

-“Contextual impression”

Challenges

-Data load

-Asset quantity once split into components for all

-Mixing without pre-established bases

References:

Florianz, Matthew. (2017, released 2022) The Challenge Of Creating Audio For Planet Coaster - Develop Conference 2017 Game Audio Talk [online]

Available at: <https://www.youtube.com/watch?v=vI56yo2RZQg>

[Accessed 6 Oct. 2024].

Kojima, Kenji., Morimoto, Akyuki., Usami, Ken. (2017, released 2019) The Sound of Horror Resident Evil 7: Biohazard [online]

Available at: <https://www.youtube.com/watch?v=By_dCUApwkw>

[Accessed 6 Oct. 2024].

Pasquier, Philippe. (2022) Deep Dive: A framework for generative music in video games [online]

Available at: <https://www.gamedeveloper.com/audio/deep-dive-generative-music-in-video-games>

[Accessed 6 Oct. 2024].

Stevens, Richard. (2016). Why Procedural Game Sound Design is so useful – demonstrated in the Unreal Engine [online]

Available at: <https://www.asoundeffect.com/procedural-game-sound-design/>

[Accessed 6 Oct. 2024].